Vibe coding in Xcode 26: is it good?

You’re more of a video kind of person? I’ve got you covered! Here’s a video with the same content as this article 🍿

Last week I recorded a livestream where I spent an hour and a half exploring the new vibe coding feature of Xcode.

I tried to use the feature to help me build a simple iOS app that lets the user answer a few quiz questions.

My goal was to try and write as little code manually as I could, so as to rely on the AI as much as possible.

And from that experience I want to share with you what worked well, what didn’t and what really disappointed me.

Let’s start with the good parts: I was indeed able to produce a simple but fully functioning quiz app in less than one hour.

Here’s what the final result looked like:

Considering that I spent quite some time commenting on what I was doing, you could probably achieve the same result even faster.

I also really liked that the AI is able to handle images in addition to text.

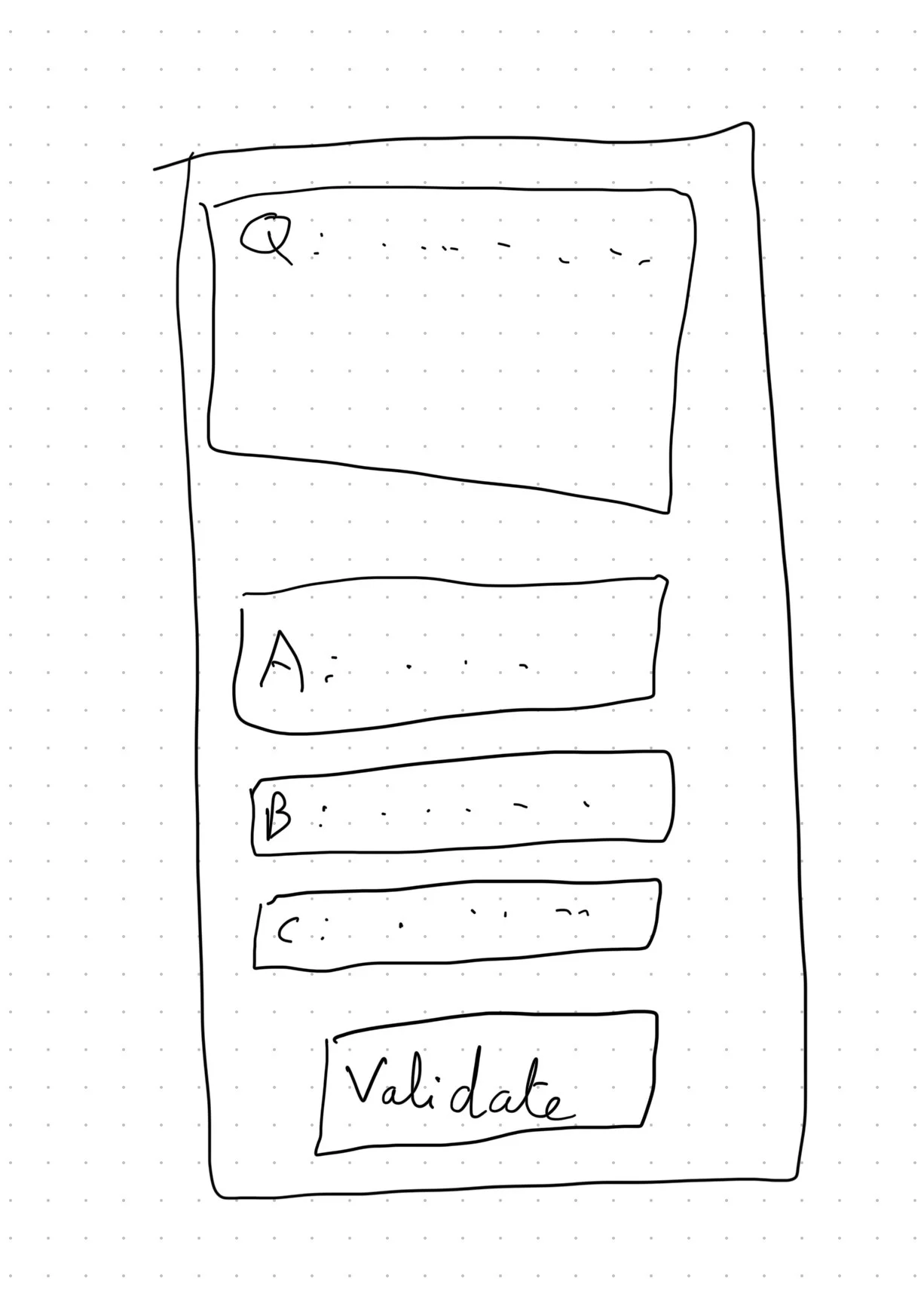

To express how I wanted the UI to look, I used this rough mock-up that I had quickly sketched using Freeform:

And the AI perfectly managed to turn it into a SwiftUI view that follows the basic Human Interface Guidelines of iOS.

This leads me to think that the vibe coding feature of Xcode 26 will be a great tool when we want to quickly prototype an idea.

Now let’s talk about the bad parts.

I asked the AI to add a time limit for answering each question.

I knew that SwiftUI has a specific modifier to animate a Text that displays a decreasing numeric counter, but I couldn’t recall its exact name.

So I described what the modifier does and I asked the AI to add it.

You can watch the full interaction at this timecode, but here’s the short version: the AI hallucinated a modifier that does not exist!
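For what it’s worth, SwiftUI does have a modifier that matches this description: `contentTransition(.numericText(countsDown:))`, available since iOS 16. Here’s a minimal sketch, assuming that’s the one I was trying to recall. The `CountdownView` name and the 10-second limit are made up for illustration and are not taken from the app built during the livestream.

```swift
import SwiftUI

// Hypothetical view: the name and the 10-second limit are illustrative only.
struct CountdownView: View {
    @State private var secondsLeft = 10

    // Publishes a tick once per second on the main run loop.
    private let timer = Timer.publish(every: 1, on: .main, in: .common).autoconnect()

    var body: some View {
        Text("\(secondsLeft)")
            .font(.largeTitle)
            .monospacedDigit()
            // Animates the digits when the text changes;
            // countsDown: true tells SwiftUI the value is decreasing.
            .contentTransition(.numericText(countsDown: true))
            .onReceive(timer) { _ in
                guard secondsLeft > 0 else { return }
                // The transition only plays when the change happens inside an animation.
                withAnimation {
                    secondsLeft -= 1
                }
            }
    }
}
```

Note that the state change is wrapped in `withAnimation`: without an animation, the text simply updates with no transition.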

This is a reminder that all the known limitations of AI models around rapidly evolving frameworks like SwiftUI are still very much present!

Luckily, in simpler cases Xcode is able to provide the AI with some guardrails, thanks to the hidden system prompt it injects into every conversation.

I’ll share more about this hidden system prompt in a future piece of content, but if you’re curious I recommend reading this article.

To finish, let’s talk about the ugly parts, the ones that really disappointed me.

I’ve been using Cursor quite a lot at work for almost a year, so I’ve gotten quite accustomed to its UX for handling generated code.

And I was quite disappointed to see the gap between the UX of Xcode and that of Cursor!

Even more so because we’re talking about traditional software features that are completely orthogonal to having good AI models.

Here’s a list of features that I really like in Cursor and that I couldn’t find in Xcode:

1. Automatically creating checkpoints for each new user prompt, so that it’s easy to revert to an earlier state of the codebase.

2. Being able to review and accept or reject the proposed changes directly in the code editor: Xcode only offers to either automatically accept all the changes or to manually review them in the chat conversation, which is quite clunky.

3. Solid handling of cross-file context: in Xcode, the AI “fix me” feature is quite dumb at it. After I moved a constant to a separate file, using this feature to update the code that referred to the constant resulted in the AI trying not only to add a duplicate of the constant back into the original file, but also to set it to a different value!

So what’s my feedback on vibe coding in Xcode 26?

I really liked how I was able to turn a rough UI sketch into a working prototype quite quickly and reliably.

For my own needs of creating sample apps to illustrate my content, I think I’ll be relying a lot on this feature!

However, it’s important to keep in mind that the results we get are still limited by how good models are at generating code for Apple platforms.

Luckily, it’s possible to use models other than the built-in ChatGPT, so when a model produces better results it will be easy to switch.

Finally, if you find yourself using this vibe coding feature a lot, I would recommend that you take the time to give Cursor a try.

Its UX for vibe coding is currently clearly superior to that of Xcode, and I really hope that Xcode will improve by the time of its final release 🤞

To conclude, if you haven’t been able to install the macOS 26 beta but are still curious to see what it’s like to use the vibe coding feature, I recommend that you watch the replay of my livestream!